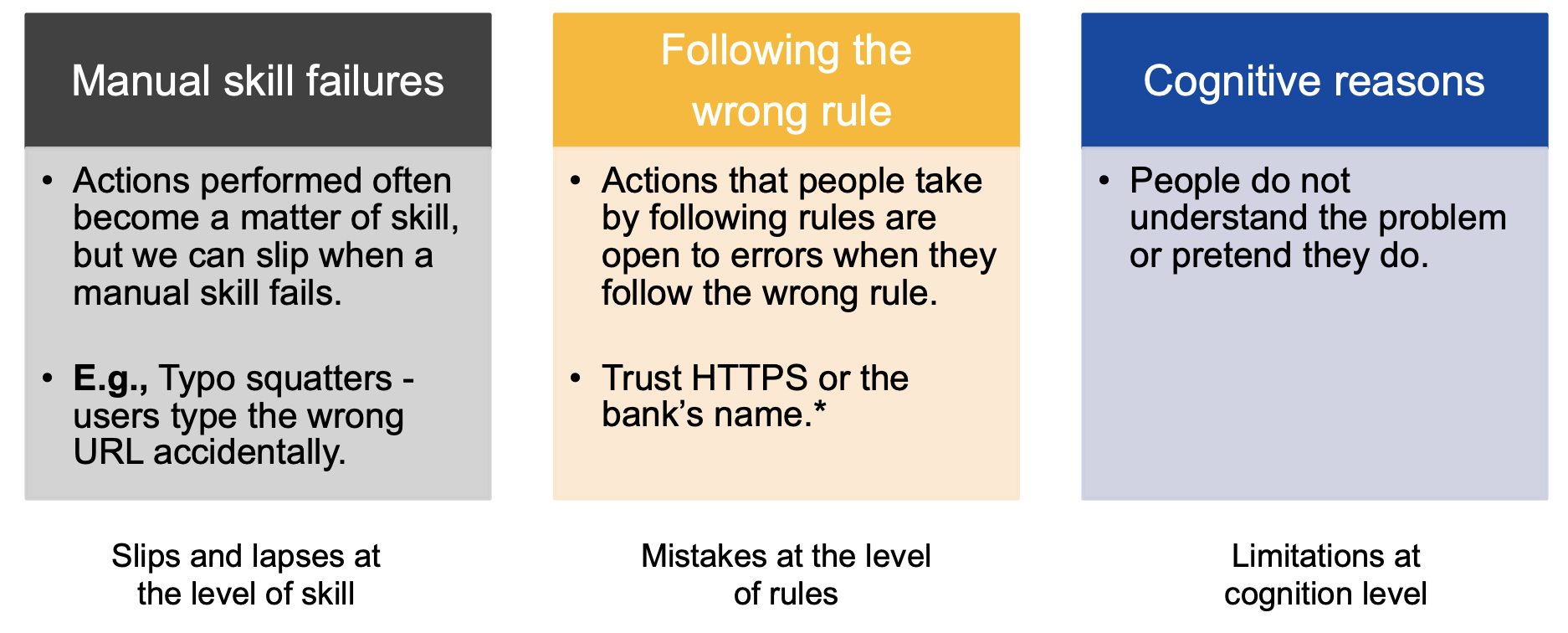

Human errors

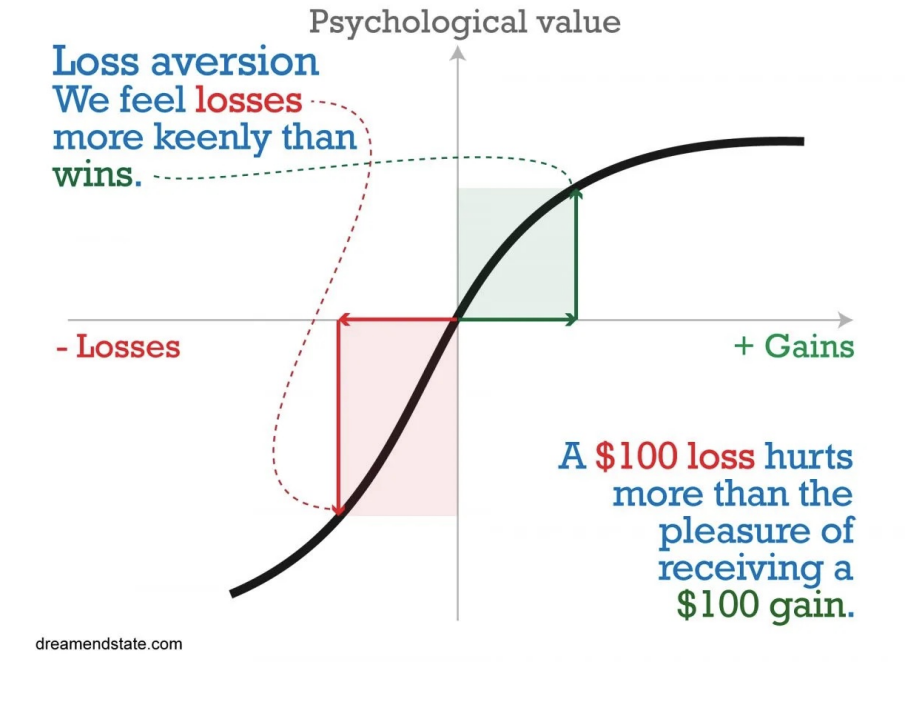

Prospect theory and risk misconception

Prospect theory

“Your PayPal account has been frozen, and you need to click here to unlock it.”

People dislike losing more than they like gaining an equivalent amount (loss aversion), and they often rely on mental shortcuts or rules of thumb (heuristics) rather than thorough analysis. Unlike expected utility theory, which assumes people make rational decisions to maximise utility, prospect theory acknowledges that people often make irrational choices based on perceived gains and losses. The message above exploits this: the threat of losing access to an account pushes the recipient to act before thinking.
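The asymmetry between gains and losses can be sketched with the value function usually associated with prospect theory. This is only an illustration: the function name `subjective_value` is made up for this note, and the parameter values are the commonly quoted estimates from Tversky and Kahneman (1992), not something stated in these notes.

```python
# Illustrative parameters (assumed, not from these notes): the estimates
# commonly quoted from Tversky and Kahneman (1992).
ALPHA = 0.88   # diminishing sensitivity to larger gains and losses
LAMBDA = 2.25  # loss aversion: a loss weighs more than an equal gain


def subjective_value(outcome: float) -> float:
    """Perceived value of a gain (positive) or a loss (negative)."""
    if outcome >= 0:
        return outcome ** ALPHA
    # Losses are scaled up by LAMBDA, so they "hurt" more than gains please.
    return -LAMBDA * ((-outcome) ** ALPHA)


if __name__ == "__main__":
    print(f"gain of 100 feels like {subjective_value(100):.1f}")
    print(f"loss of 100 feels like {subjective_value(-100):.1f}")
```

Under these assumed parameters, a loss of 100 is felt more than twice as strongly as a gain of 100, which is why "your account has been frozen" is a more effective lure than "you could gain something".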

Risk misconception

If we like an activity, we tend to judge its benefits to be high and its risk to be low. Conversely, if we dislike the activity, we judge it as low-benefit and high-risk.

Anchoring effect

People rely heavily on an initial guess or first piece of information they receive (the “anchor”) when making decisions. They then adjust their judgment based on additional information, but the final decision is often biased towards the initial anchor.

In lottery scams, the promise of a large jackpot serves as an anchor, making a small ‘processing fee’ seem negligible by comparison, leading victims to pay the fee in hopes of claiming the non-existent prize.

Availability heuristic

The availability heuristic is a mental shortcut: people tend to believe that the most easily recalled information is the most relevant or accurate for making future predictions.

Traffic ticket SMS scams exploit this by referencing common and memorable traffic violations, prompting recipients to recall their own driving experiences and pay fines without verifying the ticket’s authenticity.

Cognitive bias

Present bias & hyperbolic discounting

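Present bias is the tendency of people to give more weight to payoffs that are closer to the present than to future benefits, even if the future benefits are larger. Hyperbolic discounting is a model of this behaviour in which the perceived value of a reward drops steeply over short delays and more slowly over long ones.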

This bias causes people to decline security updates or ignore privacy policies despite claiming to care about privacy.
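A minimal sketch of hyperbolic discounting, assuming the common one-parameter form V = A / (1 + kD), where A is the reward, D the delay, and k a discount rate. The function names, the value k = 0.2 per day, and the amounts are all hypothetical choices made for illustration.

```python
def hyperbolic_value(amount: float, delay_days: float, k: float = 0.2) -> float:
    """Perceived present value under hyperbolic discounting: A / (1 + k * D)."""
    return amount / (1 + k * delay_days)


def preferred(option_a, option_b, k: float = 0.2):
    """Return the (amount, delay_days) option with the higher perceived value."""
    return max(option_a, option_b, key=lambda o: hyperbolic_value(o[0], o[1], k))


if __name__ == "__main__":
    # Same one-day gap between the two rewards, but a different starting point.
    print(preferred((100, 0), (110, 1)))    # choice between today and tomorrow
    print(preferred((100, 30), (110, 31)))  # choice between day 30 and day 31
```

With these assumed numbers the smaller reward wins when it is available immediately, while the larger reward wins when both are a month away. The same reversal explains why "install the update later" feels attractive now even to someone who values security in the abstract.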

Clustering illusion

The clustering illusion is a cognitive bias where people perceive patterns in random data. The human mind tends to find order in data, even when there is none, leading to irrational beliefs.

A famous example is the ‘hot hand’ belief in basketball, where people think a player is more likely to score again after just scoring, despite no supporting data. Scammers use this bias in phishing attacks by creating emails that appear legitimate (fake logos, graphics, or URLs that resemble those of legitimate organisations), making victims believe in a pattern that does not exist.

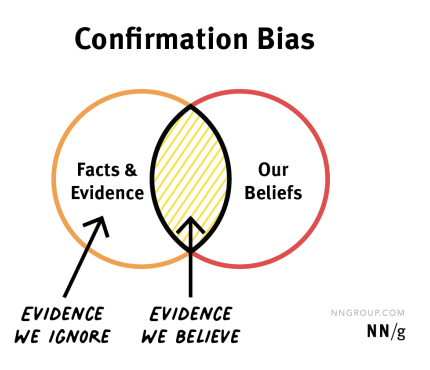

Confirmation bias

Confirmation bias is the tendency of people to seek out, interpret, and remember information that confirms their existing beliefs while ignoring or dismissing information that contradicts them.

Once a belief is established, users find it easier to ignore inconsistencies in the data they are presented with.

Zero-risk bias

Zero-risk bias is where people prefer the complete elimination of a risk in a specific area, even if it means accepting a higher overall risk. For example, in a study, participants were presented with two clean-up options for hazardous sites. One option reduces the total number of cancer cases by 6, while the other reduces the number by 5 but completely eliminates cases at one site. Despite the first option being more effective overall, many participants preferred the second option because it eliminated the risk at one site.
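The arithmetic behind the clean-up example can be sketched as follows. The per-site baselines and reductions are hypothetical numbers chosen only so that the totals match the 6 and 5 quoted above; they are not taken from the study.

```python
# Hypothetical baseline cancer cases per site (assumed for illustration).
BASELINE = {"site_a": 8, "site_b": 4}

# Hypothetical per-site reductions for the two clean-up options.
OPTION_1 = {"site_a": 4, "site_b": 2}  # total reduction of 6, no site reaches zero
OPTION_2 = {"site_a": 1, "site_b": 4}  # total reduction of 5, site_b reaches zero


def total_reduction(option: dict) -> int:
    """Total number of cases prevented across all sites."""
    return sum(option.values())


def eliminated_sites(option: dict) -> list:
    """Sites where the option removes every remaining case."""
    return [site for site, cut in option.items() if BASELINE[site] - cut == 0]


if __name__ == "__main__":
    for name, option in (("option 1", OPTION_1), ("option 2", OPTION_2)):
        print(name, total_reduction(option), eliminated_sites(option))
```

Judged on total cases prevented, option 1 is better; option 2 is only attractive because one site ends up at zero, which is exactly the feature zero-risk bias overweights.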

Decision making bias

Reciprocity

People feel the need to return favours or tend to reciprocate the actions of others, whether positive or negative. This means that if someone does something kind for us, we feel compelled to return the favour, and similarly, if someone treats us poorly, we might respond in kind.

For example, a helpful caller from the IT department walks a new employee through some software, then asks them to install that ‘new tool that IT is rolling out’. Even though installing new software may be against company policy, there is a chance that the employee will install the tool (malware).

Commitment and consistency

People tend to act in ways that align with their previous commitments and beliefs; they suffer cognitive dissonance if they feel they are being inconsistent.

For example, an attacker might call a new employee, explain security policies, and get the employee to commit to following them. Later the attacker asks for the employee’s password to “verify compliance”, exploiting the employee’s desire to be consistent with their earlier commitment.

Social proof/validation

People want the approval of others. Most people tend to comply with something when they see others do it as well.

For example, a caller says they are conducting a survey and gives the names of people in the same department who have already completed it. The victim takes part in the survey and reveals sensitive data.

Like bias

People tend to comply with requests from people they like. Most people also have a natural desire to be liked by others and to be seen as helpful.

The attacker may claim to have similar interests, beliefs, or attitudes, or they may pay compliments.

Respect for authority

People are deferential to authority figures.

For example, nurses in a hospital received a call asking them to administer medication to a patient; the caller claimed to be a hospital physician.

Scarcity

We are afraid of missing out. The more difficult it is to acquire an item, the more we value it.

User conditioning

Automatisms

Users develop automatic responses to certain situations, often without thinking.

For example, users might click away warning dialogues without reading them.

Social engineering

Social engineering is the practice of attacking a system through the people who use it. It is particularly useful for stealing credentials and identities.

Cyber_Security Human_Factors Cognitive_Bias Social_Engineering