In computer programming, there are different ways of solving a problem, these different ways may have different efficiency. Therefore, we use Big O notation to analyse the performance of an algorithm in terms of the time it takes or the amount of memory it required to do its work.

Time Complexity

Time complexity estimates the efficiency of an algorithm as its input approaches infinity, it describes the amount of time taken to run an algorithm. Since an algorithm’s running time may vary among different inputs of the same size, we commonly considers the worst-case time complexity, which is the maximum amount of time required for inputs of a given size.

Space Complexity

The space complexity of an algorithm is the amount of memory space required to solve an instance of the computational problem as a function of characteristics of the input. We exam space complexity by observing if any new variable has been initialised or new function been added to the call stack etc.

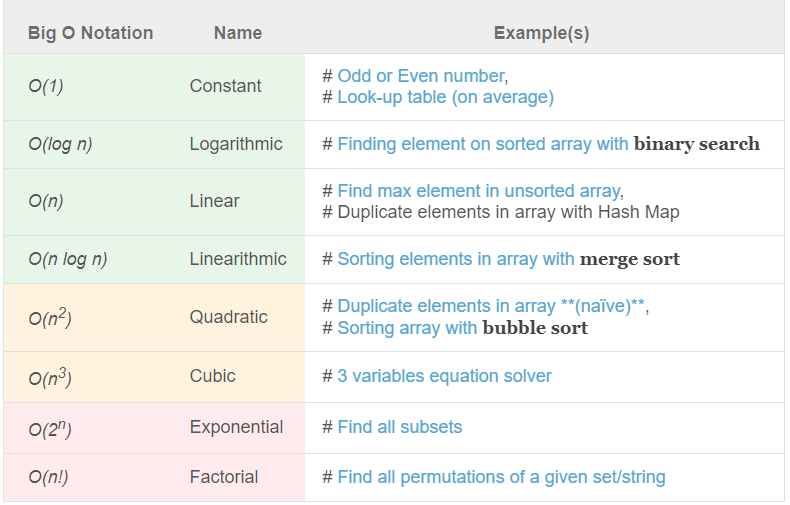

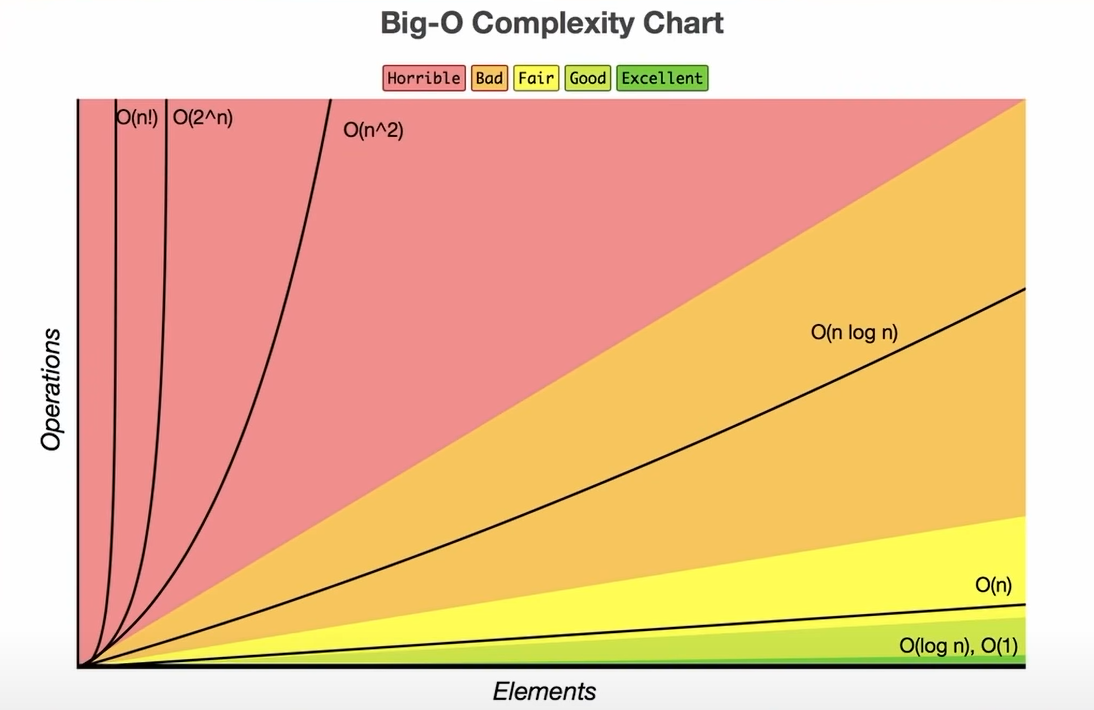

Big O Notation

Time and space complexity is commonly expressed using Big O notation where the n is the input size that influencing algorithm complexities.

Logarithms

According to logarithm rules, an equation , the exponent is set to be the logarithm of the number , which is ; you can also interpret it as raise to what power to get . A real example is , which is 10 raise to what power to get 10,000? The answer is simply 4. In computer science, in most cases, the base of the logarithm is always 2, , where is the size of the input.

Big O vs Big Theta vs Big Omega

Big O notation (O)

It is defined as upper bound and upper bound on an algorithm is the most amount of time required (the worst case performance). Big O notation is used to describe the asymptotic upper bound.

Big Theta notation (Θ)

It is defined as tightest bound and tightest bound is the best of all worst case times that the algorithm can take.

Big Omega notation (Ω)

It is defined as lower bound and lower bound on an algorithm is the least amount of time required (the most efficient way possible, in other words the best case) Just like O notation provide an asymptotic upper bound, omega notation provides asymptotic lower bound.

Time complexity sample cases

You can find more examples for complexity analysis from subsequent pages in Data Structures and Algorithms.

Constant time O(1)

The runtime of the algorithm remains constant, regardless of the input size.

int a = 5;

int b = 10;

int result = a + b;

System.out.println(result);Logarithmic time O(log n)

In computer science, the log base is always 2. For example when computing log28 the base is going to be 2, this expression can also be written as log8, and we can solve this expression by thinking about what power that 2 is raising to obtain 8 (2?=8), and the answer is 3. In terms of algorithm time complexity, O(log n) means that the size is halved each round.

function logn(n) {

while (n > 1) {

n = Math.floor(n/2)

}

}Linear time O(n)

The runtime of the algorithm grows linearly with the input size.

public static void linearTimeAlgorithm(int[] arr) {

for (int i = 0; i < arr.length; i++) { // <- O(n)

System.out.println(arr[i]); // <- O(1)

}

}Linearithmic time O(n log n)

In linearithmic or linear-logarithmic time, the runtime of the algorithm grows in proportion to n multiplied by the logarithm of n. Linear-logarithmic time often appears in nested loops, with the complexities of the two loops being O(log n) and O(n) respectively.

int linearLogRecur(float n) {

if (n <= 1)

return 1;

int count = linearLogRecur(n / 2) + linearLogRecur(n / 2);

// each recursive call has a loop with a complexity of O(n)

for (int i = 0; i < n; i++) {

count++;

}

return count;

}In this example, the number of recursive calls decreases logarithmically with the input size n because the n is halved in each recursive call.

Quadratic time O(nc)

The following code snippet has a time complexity of O(n2). The outer loop runs n times, and for each iteration of the outer loop, the inner loop also runs n times. Therefore, the total number of iterations is n * n = n^2.

int count = 0;

for (int i=0; i<A.length; i++) { // <- O(n)

for (int k=0; j<A.length; j++) { // <- O(n^2)

count++;

}

}The time complexity of a nested for loop depends on the number of iterations of each loop and how they are nested. A standard nested for loop usually has the time complexity of O(n * m) where the n and m each represents the number of iterations of each loop.

A three layered nested for loop where each iteration has the same range n will create a cubic time complexity O(n * n * n) = O(n3).

c layered nested loop will have a time complexity of O(n^c).

Exponential time O(2n)

Exponential time refers to algorithms whose runtime grows exponentially with the size of the input.

public static int fibonacci(int n) {

if (n <= 1)

return n;

else

return fibonacci(n - 1) + fibonacci(n - 2);

}The Fibonacci sequence illustrate time complexity because of the recursive nature of its calculation. The algorithm involve dividing the input n in each recursive call. In each recursive call, the input is decremented by one (n-1) or two (n-2). The number of recursive calls increases exponentially with the input size n. This because the recursive calls branch out into two separate recursive calls n-1 and n-2, leading to exponential growth in number of function calls.

Back to parent node: Data Structures and Algorithms

data_structure_and_algorithm algorithm_complexity

Reference: